AI Compilers - A Study guide

This study guide lays out a path for learning about AI compilers and systematically understanding their inner workings. It is targeted mainly at model- and framework-level developers who want a deeper understanding of what the compiler does.

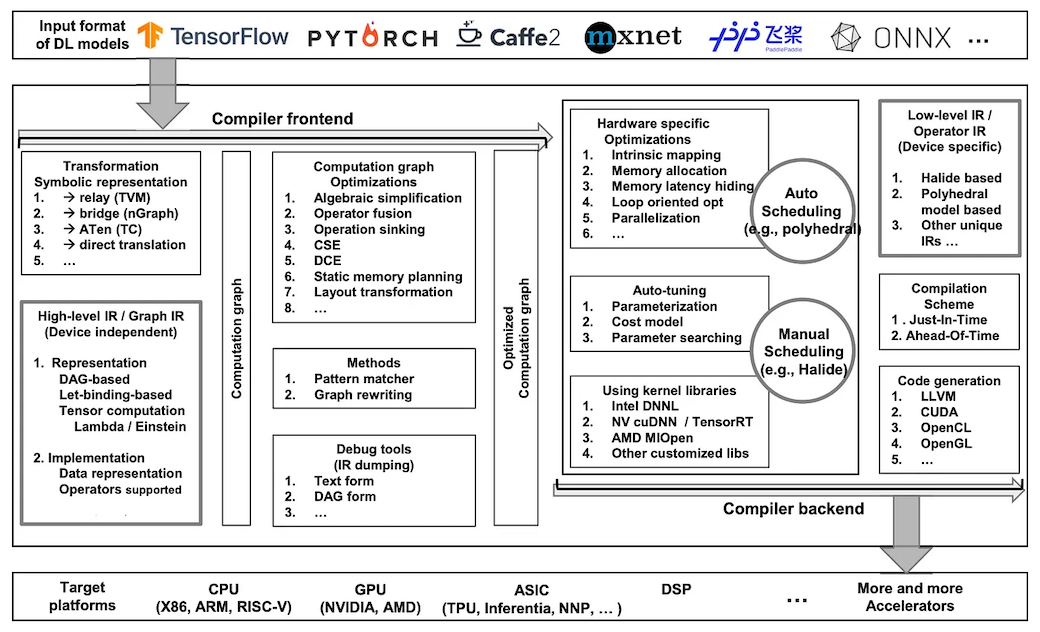

AI Compilers Demystified - An Introduction

The Medium article is a good high-level summary of where an AI compiler interacts with the frameworks. No study of ML accelerators is complete without learning about systolic arrays.

Ref: https://arxiv.org/abs/2002.03794
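To make the systolic array idea concrete, here is a minimal sketch in plain Python of an output-stationary array computing a matrix product. It is a simplified software model for intuition only, not a description of any particular accelerator: the function name `systolic_matmul` and the register layout are assumptions made for this illustration. Each processing element (PE) keeps one output value, receives operands from its left and top neighbors, and passes them on in the next cycle.

```python
def systolic_matmul(A, B):
    """Simulate C = A @ B on an M x N grid of PEs (output-stationary)."""
    M, K, N = len(A), len(A[0]), len(B[0])
    acc = [[0] * N for _ in range(M)]    # one accumulator per PE
    a_reg = [[0] * N for _ in range(M)]  # operands flowing left to right
    b_reg = [[0] * N for _ in range(M)]  # operands flowing top to bottom
    cycles = M + N + K - 2               # cycles until the last product is done

    for t in range(cycles):
        new_a = [[0] * N for _ in range(M)]
        new_b = [[0] * N for _ in range(M)]
        for i in range(M):
            for j in range(N):
                if j == 0:
                    # Row i of A enters from the left, delayed by i cycles.
                    k = t - i
                    new_a[i][j] = A[i][k] if 0 <= k < K else 0
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:
                    # Column j of B enters from the top, delayed by j cycles.
                    k = t - j
                    new_b[i][j] = B[k][j] if 0 <= k < K else 0
                else:
                    new_b[i][j] = b_reg[i - 1][j]
                # Each PE does one multiply-accumulate per cycle.
                acc[i][j] += new_a[i][j] * new_b[i][j]
        a_reg, b_reg = new_a, new_b
    return acc, cycles


result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result, cycles)
```

The skewed feeding is the key design point: operands are fetched once at the array edge and reused as they move between neighboring PEs, which is what keeps memory traffic low.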

ML Systems for TinyML

HW Acceleration provides excellent end-to-end context on why ML compilers are necessary for compute optimization and on the evolution of hardware toward TPUs and other ASICs. CS249R is a good course for learning edge ML, although it is not specifically about compilers.

Glow: Graph Lowering Compiler Techniques for Neural Networks

The Glow paper discusses what compilation is, how optimized code is generated for different types of hardware, and what IRs (intermediate representations) are. Although it is outdated and has been replaced by TorchDynamo and TorchInductor, the paper sets up a good foundation for understanding the inner mechanisms.
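As a rough illustration of what "lowering" means, the sketch below rewrites a tiny high-level graph into a lower-level one in which a fully connected layer becomes a matrix multiply followed by an add. This is a toy model in plain Python, not Glow's actual IR or API: the tuple-based graph format and the op names (`fully_connected`, `matmul`, `add`, `relu`) are assumptions chosen for the example.

```python
def lower(graph):
    """Rewrite high-level ops into simpler ops a backend can handle.

    Each node is a tuple: (output_name, op_name, input_names).
    """
    lowered = []
    for out, op, args in graph:
        if op == "fully_connected":
            x, w, b = args
            tmp = out + "_mm"  # hypothetical name for the intermediate value
            lowered.append((tmp, "matmul", (x, w)))
            lowered.append((out, "add", (tmp, b)))
        else:
            # Ops with no lowering rule pass through unchanged.
            lowered.append((out, op, args))
    return lowered


high_level = [
    ("h", "fully_connected", ("x", "w", "b")),
    ("y", "relu", ("h",)),
]
low_level = lower(high_level)
for node in low_level:
    print(node)
```

The benefit of this kind of pass is that a backend only needs to implement the small set of low-level ops, while later optimizations work on a simpler, more uniform graph.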

Textbook - Deeplearningsystems.ai

A no-nonsense, rich, end-to-end textbook for understanding the fundamentals of DL algorithms (Chapters 1-6) and the hardware- and compiler-level optimizations for these algorithms (Chapters 7-9).

A simple compiler Example

Build a Hardware Compiler for Machine Learning and Image Processing walks through, in 10 minutes, the concepts behind building a hardware accelerator for image processing and ML algorithms using frameworks like Halide and others. The goal is to convert architecture-agnostic algorithm descriptions into hardware accelerators. It emphasizes using High-Level Synthesis (HLS) as a backend instead of directly targeting Verilog or VHDL. HLS allows compilers to emit HLS C++ code with directives, which improves productivity at the potential cost of some control over optimization.

It also covers some of the design choices, such as handling optimizations that affect program semantics (e.g., quantization, bit width tuning) at the frontend, so that optimizations directly impacting program outputs are addressed early in the compilation process. The authors use “for loops” as an intermediate representation and as a target output for HLS. Frameworks like TensorFlow, PyTorch, Halide, and TVM naturally express algorithms as dense linear algebra operations, which simplifies translation to HLS-compatible constructs. They also discuss loop transformations (e.g., fusion, unrolling) and memory optimizations (e.g., banking).
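The loop transformations mentioned above can be sketched in a few lines of plain Python. This is an illustration of the general techniques only, not code from the referenced post; the function names and the scale-then-bias computation are assumptions for the example. Fusion merges two passes over the data into one and removes the temporary buffer, and unrolling performs several iterations' worth of work per loop step.

```python
def unfused(xs, scale, bias):
    """Two separate loops with an intermediate buffer."""
    tmp = [0] * len(xs)
    for i in range(len(xs)):
        tmp[i] = xs[i] * scale
    out = [0] * len(xs)
    for i in range(len(xs)):
        out[i] = tmp[i] + bias
    return out


def fused(xs, scale, bias):
    """Loop fusion: one pass, no intermediate buffer."""
    out = [0] * len(xs)
    for i in range(len(xs)):
        out[i] = xs[i] * scale + bias
    return out


def fused_unrolled(xs, scale, bias):
    """Fusion plus unrolling by a factor of 2, with a remainder step."""
    n = len(xs)
    out = [0] * n
    i = 0
    while i + 1 < n:
        # Two independent operations that hardware could run in parallel.
        out[i] = xs[i] * scale + bias
        out[i + 1] = xs[i + 1] * scale + bias
        i += 2
    if i < n:
        # Handle the last element when the length is odd.
        out[i] = xs[i] * scale + bias
    return out


data = [1, 2, 3, 4, 5]
print(unfused(data, 2, 1), fused(data, 2, 1), fused_unrolled(data, 2, 1))
```

All three versions compute the same result; what changes is how much intermediate storage is needed and how much work is exposed per iteration, which is what a hardware backend cares about.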

A day in the life of a Compiler Engineer

The TorchDynamo deep dive gives the viewer an idea of the issues that framework and compiler engineers face during their development cycle. Edward from the PyTorch team discusses TorchDynamo, the graph capture part of the torch.compile ecosystem, which aims to capture Python code efficiently by recording operations in a format suitable for compilation. TorchDynamo intercepts Python bytecode execution to generate and optimize computation graphs. He dives deep into bailouts in deep learning compilers and optimizations in symbolic evaluation during graph capture.
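To get a feel for the level at which this kind of capture operates, the snippet below uses Python's standard `dis` module to list the bytecode of a small function. It does not use TorchDynamo or PyTorch at all; `toy_model` is a hypothetical stand-in, and the exact opcode names vary between Python versions. It only shows the kind of instruction stream that a bytecode-level tool would inspect.

```python
import dis


def toy_model(x, w, b):
    # A stand-in for a model's forward pass: one multiply and one add.
    return x * w + b


# Collect the bytecode instructions the interpreter would execute.
instructions = list(dis.get_instructions(toy_model))
opnames = [ins.opname for ins in instructions]

for ins in instructions:
    print(ins.offset, ins.opname, ins.argrepr)
```

A bytecode-level capture tool would walk a sequence like this and record the arithmetic operations as graph nodes, falling back to normal Python execution when it meets something it cannot handle.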

Platforms for AI accelerator design

A concept platform for designing and evaluating ML accelerators - Gemmini | Tutorial. An interesting read for understanding the bottlenecks that usually occur in accelerator design and the challenges of designing generalized hardware.

References for further readings/courses

- Textbook: Efficient Processing of Deep Neural Networks by Vivienne Sze, Yu-Hsin Chen, Tien-Ju Yang and Joel S. Emer

- A survey of papers and books about ML Accelerators

- EE290-2 - Hardware for ML from Berkeley

- ECE 5545: Machine Learning Hardware and Systems - a well-detailed course on ML hardware and systems design, with course videos

- C4ML Course

- WashU-cse548

- IOC5009 - Accelerator Architectures for Machine Learning

Other Compiler implementations

If you found this useful, please cite this post using

Senthilkumar Gopal. (Dec 2023). AI Compilers - A Study guide. sengopal.me. https://sengopal.me/posts/ai-compilers-a-study-guide

or

@article{gopal2023aicompilersastudyguide,

title = {AI Compilers - A Study guide},

author = {Senthilkumar Gopal},

journal = {sengopal.me},

year = {2023},

month = {Dec},

url = {https://sengopal.me/posts/ai-compilers-a-study-guide}

}